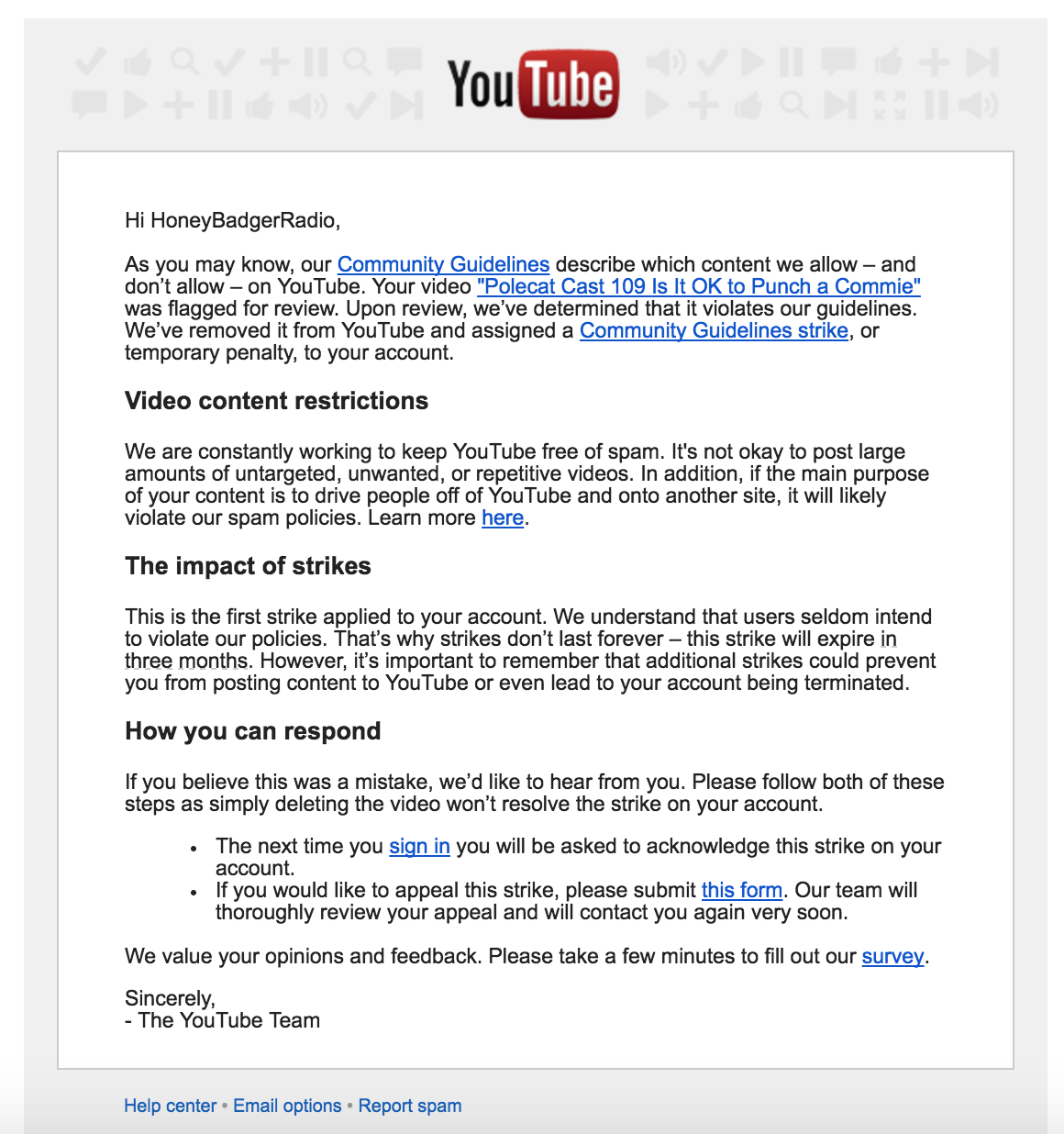

On April 19th, Honey Badger Radio received an email from YouTube with the following message:

According to YouTube’s help forum:

Community Guidelines strikes are issued when our reviewers are notified of a violation of the Community Guidelines. This includes but is not limited to videos that contain nudity or sexual content, violent or graphic content, harmful or dangerous content, hateful content, threats, spam, misleading metadata, or scams.

The email gave no specific reason for the strike, only “community guidelines.” This is how we were alerted that our April 18 hangout had been flagged by someone and reviewed, and that the strike had been applied upon review. We worded the appeal on the assumption that someone had “mistaken” the title for advocacy of violence.

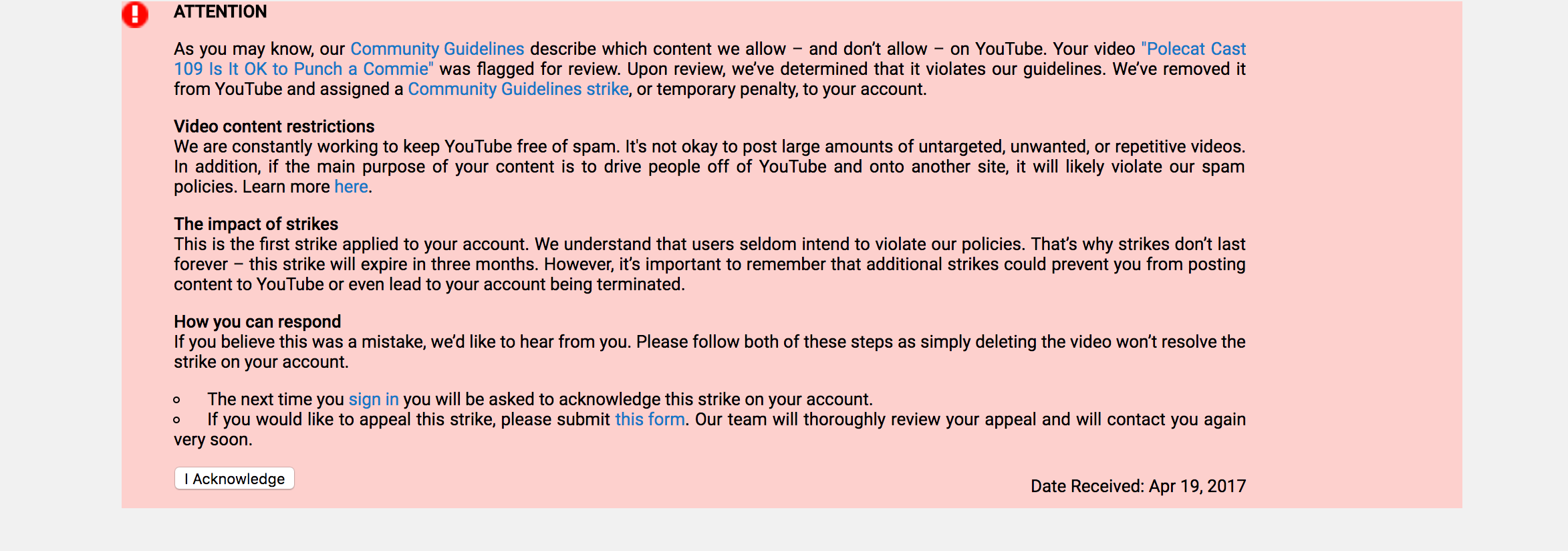

Upon submitting the appeal form, Alison logged into the Honey Badger Radio account. Before YouTube’s system would allow her access, she had to accept notification of the strike. It was in the text of that notification that we were informed that the strike was actually for the video being flagged as spam, a label which made no sense. We couldn’t identify any part of the hangout that would have led to a spam report being upheld upon review.

This was even more far-fetched than thinking someone took the title as serious advocacy for violence. According to the description in YouTube’s help system, content is considered spam if:

- its views, likes, comments, or other metrics are artificially increased, either through the use of automatic systems or by forcing or tricking viewers into watching videos (for example, a link that appears to lead to something else links to the video)

- the title, description, tags, annotations, or thumbnail are deemed misleading (misleading thumbnails are mentioned twice in the post)

- its content is a scam for financial gain

- it was uploaded for blackmail or extortion purposes

Due to the confusion, we contacted support to ask specifically what segment (or other part) of the stream was being called spam, and what characteristic of it qualified it as spam rather than content.

Support referred us back to the specialist responsible for reviewing the video, but asking that question may have led to a closer look at the content than the initial review had involved.

On April 20, we received another communication from support informing us that the strike had been dropped. While having the strike dropped is a good thing, what it confirms is not: a false flag, even one that is obviously spurious, can be upheld by YouTube’s initial review.

Without more information, speculating about why the initial review fails to identify and overturn false flags would be unproductive.

That said, it is reasonable never to assume that a strike was initially upheld because it was legitimate. Since an obviously false report can lead to a strike, it’s even reasonable to presume the initial review a waste of time and to appeal whenever a strike is received, while also using the site’s support forum to ask staff about the nature of the strike and why it was applied. It would also help to share anything you learn from your experience with YouTube’s review system. If you have had an experience with false flagging or DMCA and have published an article, blog post, or video about it, please comment on this story with a link to your description of your experience.

If we can confirm, even through such anecdotal evidence, that false flagging is a common issue on YouTube, that may be enough to support a suggestion that the site’s administrators take measures to stem deliberate false reporting, using a strike and penalty system similar to the one used against content creators who do violate YouTube’s policies.

I’ve seen other sites do this in response to users abusing reporting systems for terms of service violations. Why? Vexatious abuse of terms of service violation reporting places an undue burden on staff, and if left unchecked, can become expensive by creating a demand for more employee time on the clock. It can also make it harder for staff to identify and handle actual TOS violations.

To keep a balance between allowing TOS-violating content to be flagged and discouraging false flags, social sites that use such a system limit strikes to instances where the circumstances indicate the flag was deliberate rather than an error. Users are also warned before flagging content that repeated false flagging can result in those strikes and penalties.

YouTube could even add ineligibility for participation in the YouTube Heroes program as one of its penalties.
